So it’s been a little while since my last post, simply because work, research and life took over. Yes, there was some downtime as well: seeing the excellent Truck Fighters in London with my son was time well spent!

Outside of work and having fun, I’ve been very busy on the research front, so let’s get started! If you’re going to read it all now, grab a cup of tea first, then scroll and click the links. It’s a three-week update with a lot of off-site content to read if you follow all the links 🙂

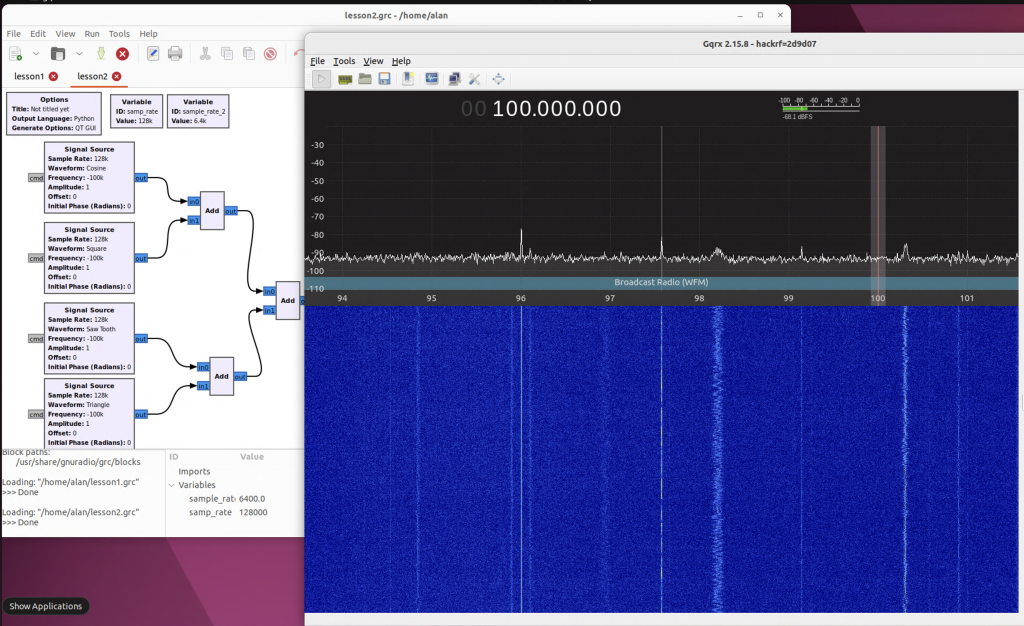

GNU Radio + GQRX

The main GPU server, on which the SDR and data collection run, is running Ubuntu Jammy 22.04. The reason is compatibility with CUDA and with the many frameworks that use the GPU. I would run a later version of Ubuntu, but I haven’t seen evidence that CUDA and the ML/DL frameworks run reliably on anything newer.

As it is, I ran into an issue running GQRX and GNU Radio from the packaged tools: they refused to run, despite coming from the same repo. The issue was the GNU Radio runtime library, and the fix that worked for me was to symlink the library so both apps could run.

ln -s /usr/lib/x86_64-linux-gnu/libgnuradio-runtime.so.3.10.1 /usr/lib/x86_64-linux-gnu/libgnuradio-runtime.so.3.10.7

I’ve not checked all the differences between .10.7 and .10.1, but GQRX and GNU Radio now work perfectly.
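Before creating a symlink like this, it can help to see which runtime library versions are actually installed and which of them are already links. The following is a minimal sketch in plain Python, assuming the libraries live in /usr/lib/x86_64-linux-gnu as in the command above; the function name `list_runtime_libs` is my own and not part of GNU Radio or GQRX.

```python
import os
from pathlib import Path


def list_runtime_libs(lib_dir):
    """Return (filename, symlink_target_or_None) for each libgnuradio-runtime.so* entry."""
    directory = Path(lib_dir)
    if not directory.is_dir():
        return []
    results = []
    for path in sorted(directory.glob("libgnuradio-runtime.so*")):
        # os.readlink gives the path a symlink points at; regular files get None.
        target = os.readlink(path) if path.is_symlink() else None
        results.append((path.name, target))
    return results


if __name__ == "__main__":
    # Assumed location, taken from the ln -s command in the post.
    for name, target in list_runtime_libs("/usr/lib/x86_64-linux-gnu"):
        if target is None:
            print(f"{name} (regular file)")
        else:
            print(f"{name} -> {target}")
```

If the version an application asks for is missing from the list, that is the name the symlink needs to provide.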

I am thinking of adding another SDR so I can collect other signals from the large number of antennas I have available at my house. Whilst I won’t use that data for my research, I can compare the SDR radio functions against the output received in GQRX.

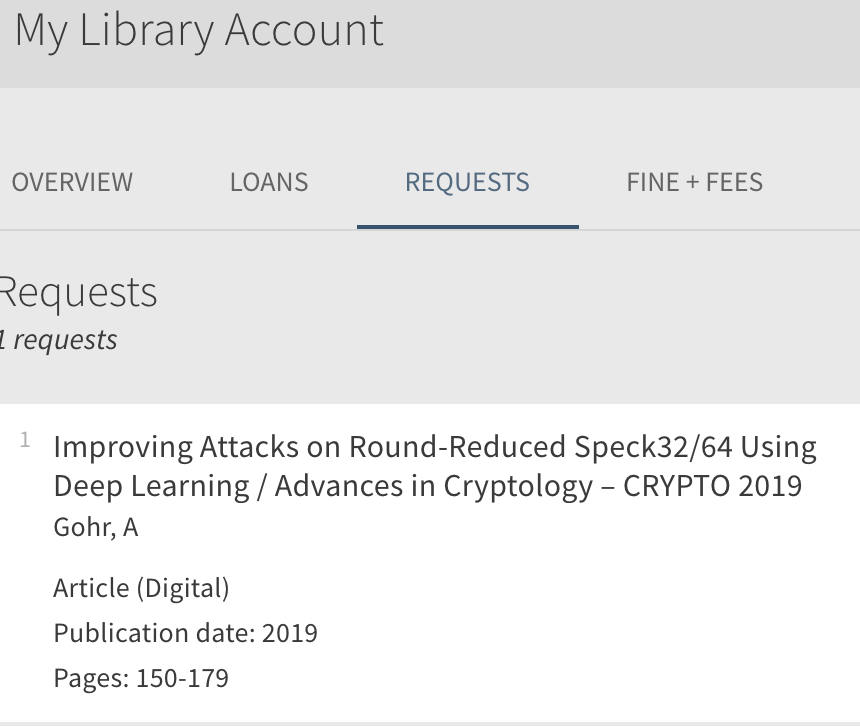

Using Bangor Library Request Service

There is a paper which many other cryptanalysis articles reference. I’ll include the LaTeX (BibTeX) reference, as that is what I imagine most researchers are using for referencing.

@inproceedings{gohr2019improving,

title={Improving attacks on round-reduced speck32/64 using deep learning},

author={Gohr, Aron},

booktitle={Advances in Cryptology--CRYPTO 2019: 39th Annual International Cryptology Conference, Santa Barbara, CA, USA, August 18--22, 2019, Proceedings, Part II 39},

pages={150--179},

year={2019},

organization={Springer}

}

I was unable to find this publication on the library access portal or in public resources, so I raised an ILL request via https://library.bangor.ac.uk/. I had to copy details from the LaTeX reference into the request, and within 2 (working) days the paper had been supplied to me for review.

If you are a Firefox user, an excellent plugin that comes recommended by Bangor University Library Services is Lean Library. The plugin loads in your browser and, when you search for articles, checks whether each article can be downloaded via your Bangor University login (even easier if you have set up the Google/IEEE alerts). It only needs configuring once, and it is excellent for getting hold of publications that are otherwise unavailable via public access. For things which aren’t available to download, the ILL (Inter Library Loan) request is the way to go!

In the 2 months I’ve spent building up a catalogue of papers, I have read 11 publications on cryptanalysis, and I will re-read them when it comes to building up my Literature Review section. I’m hoping to have at least 50-60 articles read by the time my first-year review comes up, and all the tools and automation are very much helping me towards that goal!

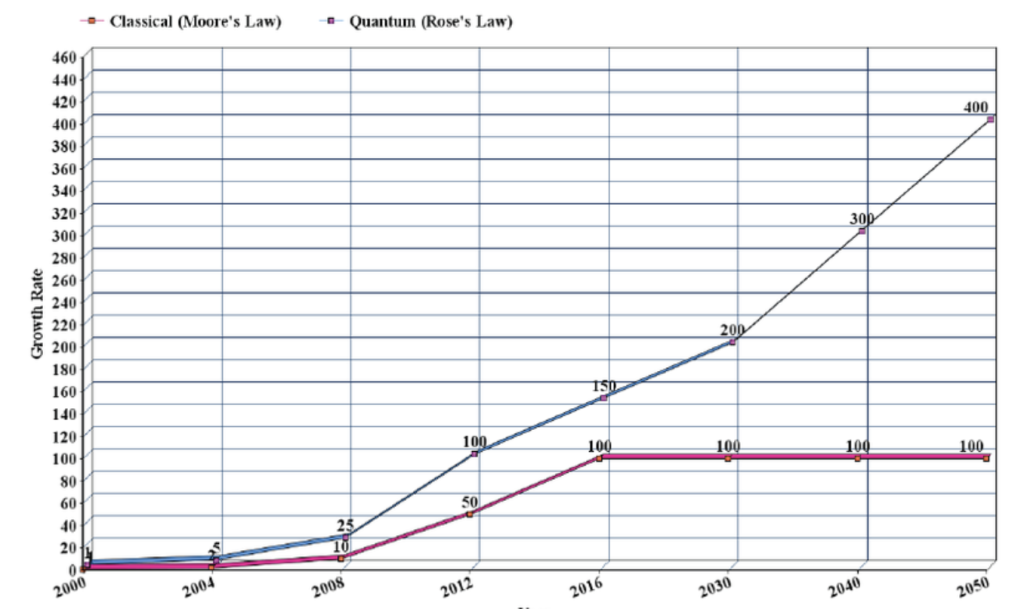

Quantum Computing

Quantum computing matters to all of computing, because it will change what a computer is able to do. Rose’s Law is similar to Moore’s Law, but with a focus on quantum: whilst the growth of classical computing power and chips follows Moore’s Law, the number of qubits and the processing power of quantum computers is predicted to outpace classical computing. This prediction is given in Atta Rahman’s work, as shown below.

The milestone of 10,000 qubits is now within reach and, as explained by the excellent Sabine Hossenfelder, is becoming a realistic goal.
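To get a feel for why the two laws diverge so quickly, here is a small arithmetic sketch. It assumes the commonly quoted forms of each law, namely a doubling roughly every two years for Moore’s Law and roughly every year for Rose’s Law. Those periods are my assumption for illustration, not figures taken from the work cited above, and the numbers are growth factors rather than real chip or qubit counts.

```python
def projected_growth(start, years, doubling_period_years):
    """Exponential growth: the value doubles once every doubling_period_years."""
    return start * 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    for years in (2, 4, 6, 8, 10):
        moore = projected_growth(1, years, 2.0)  # assumed: doubles every two years
        rose = projected_growth(1, years, 1.0)   # assumed: doubles every year
        print(f"after {years:2d} years: Moore-style x{moore:.0f}, Rose-style x{rose:.0f}")
```

Halving the doubling period squares the growth factor over the same time span, which is why a yearly doubling pulls away so sharply.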

For me as a cryptanalysis researcher, the possibility of being able to train and run models on a quantum computer will undoubtedly change the security requirements we take for granted today. Attacks on encryption routines that would have taken hours, if not days, to train on classical GPUs could turn into minutes.

I set about researching how I could get into quantum computing myself. The first step was to find a quantum emulator I could run on classical hardware, and in this case I went for Intel-QS (the Intel Quantum Simulator). All of the source code and installation instructions are available on GitHub. I spent several hours attempting to get it running on my ‘bare metal’ install and in a Python venv, but to no avail. My disappointment at not getting the ‘local’ environment to run soon turned into something much better: actually being able to use IBM’s quantum computer!
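As an aside, for anyone wondering what a quantum emulator on classical hardware actually does, the sketch below is a toy state-vector simulation in plain Python. It is not Intel-QS and it is not IBM’s API; the function names are made up for illustration. It prepares two qubits in |00>, applies a Hadamard gate and a CNOT gate, and prints the measurement probabilities of the resulting entangled (Bell) state.

```python
import math


def apply_hadamard(state, qubit):
    """Apply a Hadamard gate to one qubit of a state vector (a list of amplitudes)."""
    s = 1 / math.sqrt(2)
    new_state = list(state)
    for i in range(len(state)):
        if not (i >> qubit) & 1:        # basis index with the target bit = 0
            j = i | (1 << qubit)        # partner index with the target bit = 1
            a, b = state[i], state[j]
            new_state[i] = s * (a + b)
            new_state[j] = s * (a - b)
    return new_state


def apply_cnot(state, control, target):
    """Swap amplitudes so the target bit is flipped wherever the control bit is 1."""
    new_state = list(state)
    for i in range(len(state)):
        if (i >> control) & 1 and not (i >> target) & 1:
            j = i | (1 << target)
            new_state[i], new_state[j] = state[j], state[i]
    return new_state


def probabilities(state):
    """Measurement probability of each basis state."""
    return [abs(amplitude) ** 2 for amplitude in state]


if __name__ == "__main__":
    state = [1.0, 0.0, 0.0, 0.0]        # two qubits, both starting in |0>
    state = apply_hadamard(state, 0)    # put qubit 0 into superposition
    state = apply_cnot(state, 0, 1)     # entangle qubit 1 with qubit 0
    for index, p in enumerate(probabilities(state)):
        print(f"|{index:02b}> : {p:.2f}")
```

The state vector holds 2^n amplitudes for n qubits, so the memory needed doubles with every qubit added, which is why emulators on classical machines run out of room fairly quickly.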

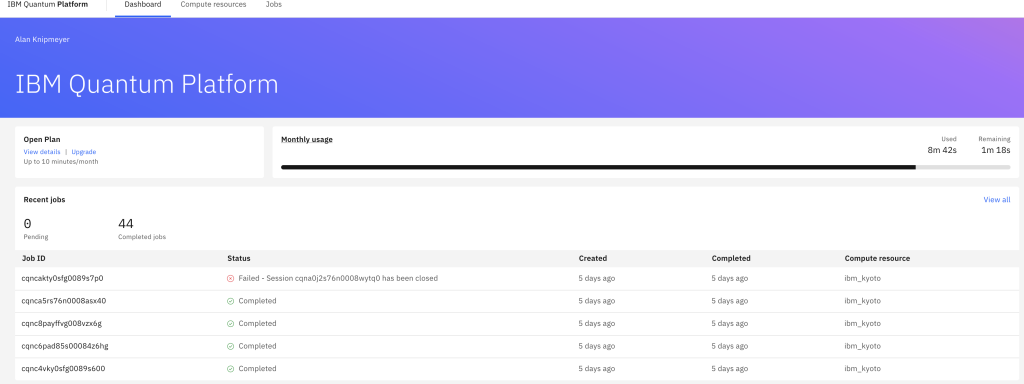

I created the necessary account on IBM’s quantum computing website, then was presented with a very slick dashboard.

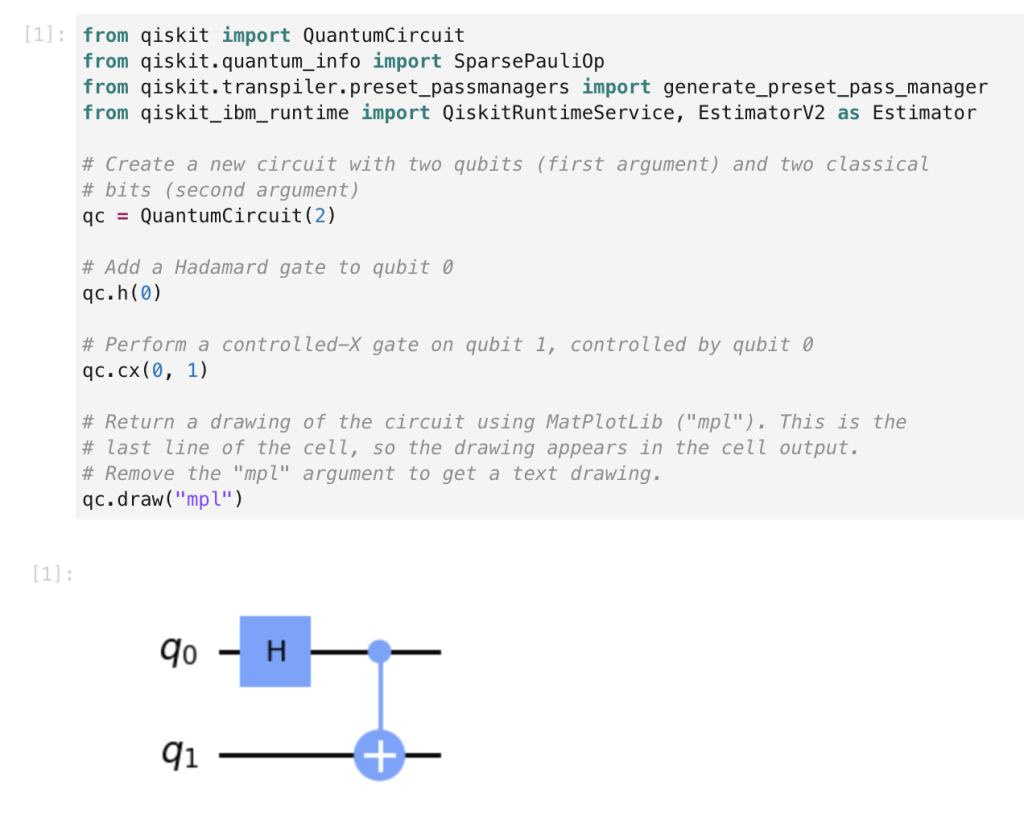

Quantum is very new to me, but I was largely able to follow the labs via the provided Jupyter Notebooks, which ran on IBM’s Quantum Lab.

I was able to go through most of the ‘getting to know the environment’ material and advance my knowledge of quantum computing. There is of course a caveat: the ‘free’ tier provides 10 minutes of quantum processing time a month, and doing these tutorials took 8 minutes of it!

I can, however, still read the documentation. I find it heavy going and will need to read and review it several times at least, but it is all available online and free!

Do check the pricing at IBM Quantum and do pay attention to the Quantum Dashboard for minutes left/used per month.

I will continue to read publications on quantum computing and to understand more about it. I’m sure it will at least influence my research, or maybe become part of it (if IBM offer academic access to Quantum, that would be nice!). Update: they do, here

Apache TVM

So, going from high-end computing, we now look at Apache TVM. What is Apache TVM, I hear you say? Well, in layman’s terms it allows the use of many ML/DL frameworks on many hardware types. This is significant as it includes non-GPU hardware. Yes, Tensorflow, Keras,

I’m very interested in TVM as it opens up the possibility of enhancing ML and reducing the time it takes to train models that give accurate results. If this can be done on the kind of devices found on the Internet of Things (IoT), it is a very big game changer. In my lab, my target board is an RPi3b running Strongswan and various cryptographic routines; the GPU system captures the traces and, with pre-processing, the signals for the cryptographic routines are isolated. If this could all be run on the RPi3b with TVM, or on another RPi3b (for example the other end of the IPsec gateway, or the management host running Wireshark), then the time to break keys could be greatly reduced on very limited, cut-down hardware.

I started by following the install documentation here, which was sufficient to get up and running. I followed the Python for Developers process on the bare-metal GPU server (no conda, no venv). I gave the Docker solution a look, but ran into issues and didn’t want to spend time running in a container until I had it working locally first (I’m old skool like that: I like compiling stuff and optimizing where I can).

The significant file for building TVM is tvm/build/config.cmake. I’ll include the changes I made below so that it builds with CUDA and can use the GPU:

set(USE_CUDA ON)

set(USE_RPC ON)

set(USE_GRAPH_EXECUTOR ON)

set(USE_LLVM ON)

set(USE_RANDOM ON)

I followed the tvmc command line tutorial, which got me up and running. I did create an alias for tvmc in my .bashrc:

alias tvmc='python -m tvm.driver.tvmc'
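Since config.cmake is a long file, it is handy to double-check which options are switched on before kicking off a build. The sketch below is a small, self-contained parser for `set(OPTION VALUE)` lines, using the options from this post as sample input. The helper names `parse_cmake_flags` and `is_enabled` are my own, not part of TVM or CMake, and the parser only understands simple single-line `set(...)` calls.

```python
import re

# Matches lines such as: set(USE_CUDA ON)
# Lines commented out with a leading '#' do not match.
SET_PATTERN = re.compile(r"^\s*set\(\s*(\w+)\s+(\S+?)\s*\)", re.MULTILINE)

SAMPLE_CONFIG = """
set(USE_CUDA ON)
set(USE_RPC ON)
set(USE_GRAPH_EXECUTOR ON)
set(USE_LLVM ON)
set(USE_RANDOM ON)
# set(USE_SOMETHING_ELSE ON)
"""


def parse_cmake_flags(text):
    """Return {option: value}; if an option is set twice, the last value wins."""
    return {name: value for name, value in SET_PATTERN.findall(text)}


def is_enabled(flags, name):
    """True when the option is present and set to ON (case-insensitive)."""
    return flags.get(name, "OFF").upper() == "ON"


if __name__ == "__main__":
    # To check a real build, read the file instead, for example:
    #   text = pathlib.Path("tvm/build/config.cmake").read_text()
    flags = parse_cmake_flags(SAMPLE_CONFIG)
    for name in sorted(flags):
        print(f"{name} = {flags[name]}")
    print("CUDA enabled:", is_enabled(flags, "USE_CUDA"))
```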

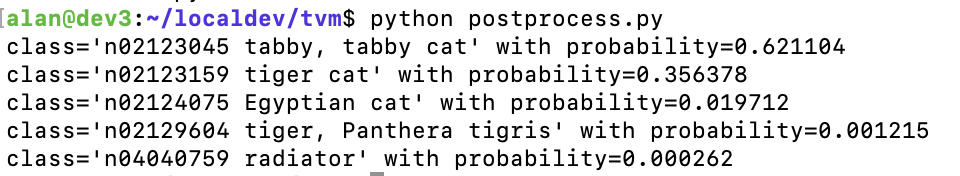

Following the tutorial, I was amazed at how quickly TVM ran and identified the cat. Whilst I compiled in the CUDA options, this run was against the native CPU.
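Whether a model runs on the CPU or the GPU comes down to the target given to tvmc at compile time. The sketch below builds the `tvmc compile` command line for a chosen target, assuming the target strings `llvm` (CPU) and `cuda` (GPU). The model and output file names are placeholders of mine, not the files from the tutorial, and I have not benchmarked the two against each other.

```python
import shlex
import sys


def tvmc_compile_command(model_path, target, output_path):
    """Build the argument list for 'python -m tvm.driver.tvmc compile'."""
    return [
        sys.executable, "-m", "tvm.driver.tvmc", "compile",
        "--target", target,
        "--output", output_path,
        model_path,
    ]


if __name__ == "__main__":
    # Placeholder file names; swap in the real model and output paths.
    for target in ("llvm", "cuda"):
        command = tvmc_compile_command("model.onnx", target, f"model-{target}.tar")
        print(shlex.join(command))
        # To actually run it (requires a working TVM install):
        #   subprocess.run(command, check=True)
```

Building the command as a list keeps the target explicit, so the same script can compile once for the CPU and once for CUDA and the results can be compared.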

I’m very excited by TVM and I will be spending a lot more of my research time getting to understand it even more.

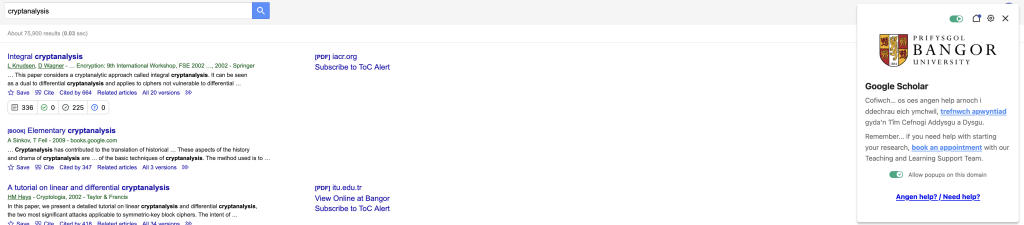

Publication Review

Learning And Experiencing Cryptography with Cryptool and SageMath

Bernhard Esslinger (2024)

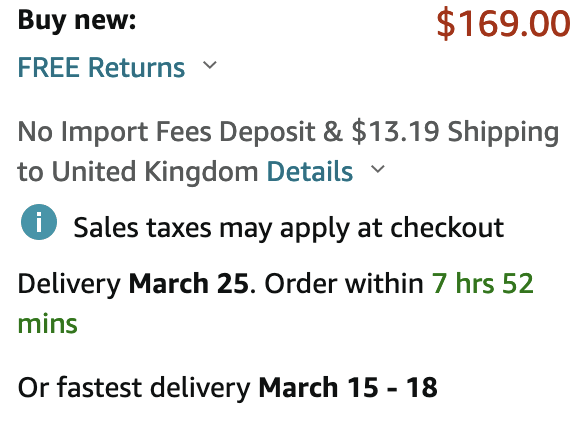

One of the links found by Google Scholar was the book named in the title of this section. Searching for the book, I headed to Amazon and, being based in the UK, used their UK website. The earliest delivery date for the book was May, possibly as late as September!

Having read the sample chapters online, I was really eager to get this book, so I headed to Amazon.com.

I ordered via Amazon.com in America and was amazed to receive my book in under a week. Yes, this was not a cheap book, but I was very excited to receive it and start reading through. I will give my feedback so far, up to Chapter 1.

Firstly, the binding and quality of this book are very high. The book will last a long time if cared for properly. The paper is heavy, yet smooth to the touch, with a clear, well-set typeface and, so far, very well laid out pages. I wear my reading glasses to make this an enjoyable reading experience. Now that we have covered the ‘physical’, what about the contents?

The initial pages list all the information required for citation, although I would use the LaTeX from Google Scholar. The table of contents is clear and makes navigating to a specific section easy. I know this book will need several readings, especially when I come across a specific point to put in my literature review, so this clear chapter and page guide will save on putting post-it notes throughout the book, or worse still *writing on it* (I use a physical journal for notes; the only thing I ever write in a book, in HB pencil, is the date I received it, and that’s it!)

The preface captures the need for the book and how each chapter advances the knowledge of the reader. I particularly like this form of structure (it’s similar to a thesis/dissertation introduction), and it makes it easy for me to understand what this book will show me.

The Acknowledgement section should not be overlooked. Not only does it give credit to the people who helped write the various sections of the book, it also gives the reader the opportunity to go and find publications by these authors.

The Introduction chapter goes on to describe each tool and how it is used, giving me, the reader, confidence that I can run all these applications and follow along with the writer. It seems I will use a mix of macOS and Linux (Ubuntu), depending on whether a GUI is required. I have RDP access to the GPU environment desktop, as you will have seen from the various screenshots I provide, so it will be good to try the tools on both and see how well they perform on each platform.

I shall be reading Chapter 1 this week and will provide regular updates in the blog as to how the book is going, in particular any problems I run into and how I overcome them. I don’t suspect there will be many, but operating system changes and patching will often outstrip the documentation provided in print.

Well done !

Well done if you made it this far. I really appreciate the people who read the blog, and I hope you enjoy reading it as much as I enjoy writing it. I will soon open up the comments section once I have worked out the best way to implement it, as I will need some method of verification to stop ‘bots’ and to keep the audience and the people providing input to a relevant group.

Until the next posting (which will hopefully be sooner than 3 weeks) I wish you all well.