So it's been a busy couple of weeks, writing up and collating information for my first Post Graduate Review (PGR) and actually going to Bangor for training seminars, but more on that in a few weeks or so, when all the sessions are completed!

Today I’d like to focus on a new-to-me method of installing and running Kubernetes on Ubuntu. Many people starting with Kubernetes will use, and stick to, microk8s, which can enable GPU support via a single command. However, I am always keen to try out something new, and this week I will focus on kind.

What is kind, and why use it over microk8s and others?

kind (Kubernetes IN Docker) runs each cluster node as a Docker container. MicroK8s is excellent, but it can get quite technical. Whilst that is not objectionable to me, I like to focus on actually building distributed container images that work on Kubernetes easily, i.e. CI/CD and MLOps methodologies. What I ‘lose’ by not building a ‘production-like’ Kubernetes environment, I recoup in time focused on Machine Learning and the ability to use GPUs easily. For a deeper comparison, I can recommend the page put together by Alperen, ‘Simple Comparison of Lightweight K8S Implementations’.

Getting started with Kind

I started out with a vanilla install of Ubuntu Server 22.04 and installed the NVIDIA drivers via the apt repository method. This did put me on a slightly older version of CUDA, but it was sufficient to install Docker with GPU support. Documentation for this step is on NVIDIA’s website here; I didn’t encounter any issues following the steps provided.

sudo docker run --rm --runtime=nvidia --gpus all ubuntu nvidia-smi

Sun Jun 2 22:49:42 2024
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 470.239.06   Driver Version: 470.239.06   CUDA Version: 11.4     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  NVIDIA GeForce ...  Off  | 00000000:01:00.0 Off |                  N/A |
|  0%   48C    P8    14W / 184W |      0MiB / 12021MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+

The prerequisite for installing kind with go install is to have Go installed. This was easily done using Ubuntu's package manager, apt; this page was sufficient to install Go without any other dependencies.

As I just wanted to get this up and running, I used root for now and will modify this later, but the steps should be the same for a regular user account. Installing kind was as easy as typing a single command. It's worth checking the kind repository for the latest version; as of writing it's 0.23.0.

PATH=/root/go/bin:$PATH

go install sigs.k8s.io/kind@v0.23.0

kind create cluster
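The hard-coded /root/go/bin above only works for the root account. As a minimal sketch for a regular user, the snippet below asks Go where it installs binaries instead. It assumes GOBIN is unset, so `go install` writes to the bin directory under `go env GOPATH`; `prepend_path` is a helper name I made up for illustration and is not part of Go or kind.

```shell
# Prepend a directory to PATH only if it is not already listed.
# prepend_path is an illustrative helper, not part of Go or kind.
prepend_path() {
  case ":$PATH:" in
    *":$1:"*) ;;               # already present, nothing to do
    *) PATH="$1:$PATH" ;;
  esac
}

# `go env GOPATH` prints the Go workspace root (usually ~/go);
# with GOBIN unset, `go install` puts binaries in its bin/ subdirectory.
if command -v go >/dev/null 2>&1; then
  prepend_path "$(go env GOPATH)/bin"
  export PATH
fi

# Once PATH is set, this should find the binary:
#   kind version
```

Putting these lines in ~/.profile keeps kind on the PATH across logins, and the duplicate check stops PATH from growing each time the file is sourced.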

On the ‘dev’ machine I have here, it only took a few moments for kind to build all the necessary components for a fully functioning Kubernetes system. I tested this out with the standard ‘hello world’ Docker containers. Next I wanted to build with GPU support using the NVIDIA runtime. On a regular Kubernetes cluster this is quite an involved process, as documented here.

Once I had tested that a ‘standard’ kind Kubernetes install worked correctly, I set about getting the GPU support working. This was far easier than the lengthy process of setting up a Kubernetes cluster on bare metal with GPU support. I needed to install ‘helm’ to load the charts for the GPU. The full installation instructions are provided here.

kind create cluster --name substratus --config - <<EOF
apiVersion: kind.x-k8s.io/v1alpha4
kind: Cluster
nodes:
- role: control-plane
  image: kindest/node:v1.27.3@sha256:3966ac761ae0136263ffdb6cfd4db23ef8a83cba8a463690e98317add2c9ba72
  # required for GPU workaround
  extraMounts:
    - hostPath: /dev/null
      containerPath: /var/run/nvidia-container-devices/all
EOF
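The post does not list the helm commands themselves, so what follows is only a sketch of how I understand the remaining steps in the linked instructions, not a record of what was actually typed. It assumes the cluster name `substratus` from the config above (so the node container is `substratus-control-plane`), and that Docker on the host already uses the NVIDIA runtime by default with `accept-nvidia-visible-devices-as-volume-mounts = true` set in /etc/nvidia-container-runtime/config.toml. The function name `install_gpu_operator` is my own; wrapping the steps in a function means nothing runs until it is called.

```shell
# Sketch: install the NVIDIA GPU Operator into the kind cluster.
# install_gpu_operator is an illustrative name; the steps are an assumption
# based on the linked guide, not commands quoted from this post.
install_gpu_operator() {
  for tool in docker helm; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing required tool: $tool" >&2
      return 1
    fi
  done

  # Workaround: the NVIDIA tooling looks for /sbin/ldconfig.real in the node.
  docker exec substratus-control-plane \
    ln -sf /sbin/ldconfig /sbin/ldconfig.real || return 1

  # Adding the repo fails harmlessly if it is already registered.
  helm repo add nvidia https://helm.ngc.nvidia.com/nvidia || true
  helm repo update

  # driver.enabled=false reuses the driver already installed on the host
  # instead of letting the operator deploy its own.
  helm install --wait --generate-name \
    -n gpu-operator --create-namespace \
    nvidia/gpu-operator --set driver.enabled=false
}
```

Running `install_gpu_operator` after the cluster is up should leave the operator pods in the `gpu-operator` namespace, which can be watched with `kubectl get pods -n gpu-operator`.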

This process took a few moments, and I did run into some issues with “Too Many Open Files”. This was quickly resolved by following the Ubuntu and Kubernetes fixes for these errors. After a reboot, my Kubernetes node was running with GPU support!
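The post does not say which fix was applied. One common cause of “too many open files” with kind is the host's inotify limits, and kind's Known Issues page suggests raising them to the values used below. The sketch only reads the current limits and prints the `sysctl` commands that would raise them; it assumes a Linux host, and the helper names `below` and `suggest` are hypothetical.

```shell
# Values suggested on kind's "Known Issues" page for this error.
WANT_WATCHES=524288
WANT_INSTANCES=512

# Succeeds when the current value ($1) is lower than the wanted value ($2).
below() { [ "$1" -lt "$2" ]; }

# Print the sysctl command for one setting, but only if it needs raising.
# usage: suggest <sysctl-key> <current> <wanted>
suggest() {
  if below "$2" "$3"; then
    echo "sudo sysctl $1=$3"
  fi
}

# Read the live limits from /proc and report what (if anything) to change.
if [ -r /proc/sys/fs/inotify/max_user_watches ]; then
  suggest fs.inotify.max_user_watches \
    "$(cat /proc/sys/fs/inotify/max_user_watches)" "$WANT_WATCHES"
  suggest fs.inotify.max_user_instances \
    "$(cat /proc/sys/fs/inotify/max_user_instances)" "$WANT_INSTANCES"
fi
```

Settings changed with `sysctl` on the command line are lost on reboot; adding the same two keys to /etc/sysctl.conf makes them persistent.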

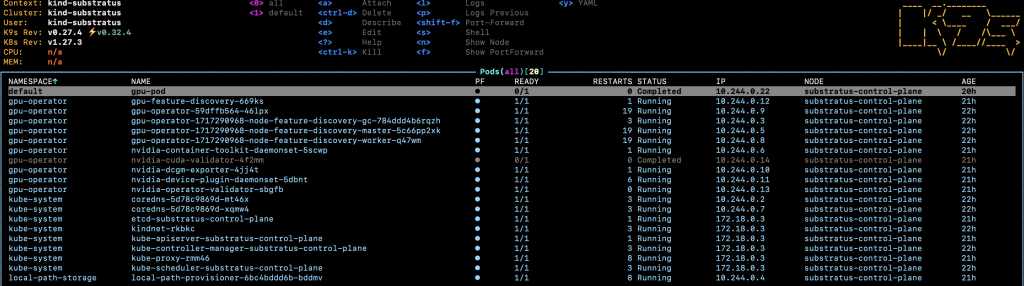

One of my favorite tools I have been introduced to in the last year is k9s. Whilst I won't do a deep dive on all the functions it has, I can strongly recommend installing it. I used the ‘snap’ package manager to install k9s:

snap refresh k9s --channel=latest/stable

It was then super easy to start up k9s and navigate my installation.

Testing GPU Support within Kubernetes

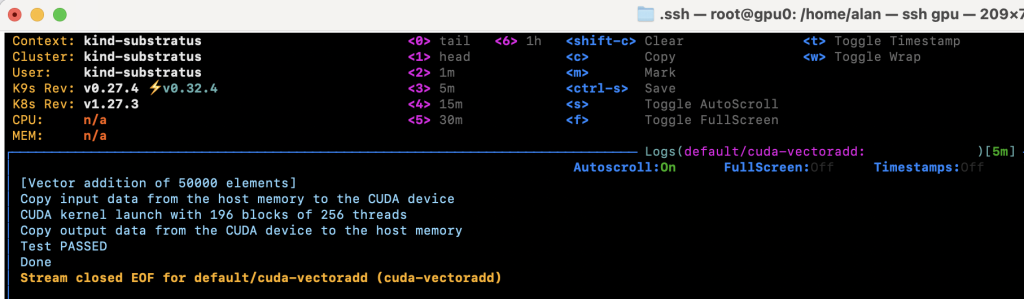

Whilst my version of CUDA is slightly older, I was able to run an earlier version of the vectoradd sample:

kubectl apply -f - << EOF
apiVersion: v1
kind: Pod
metadata:
  name: cuda-vectoradd
spec:
  restartPolicy: OnFailure
  containers:
  - name: cuda-vectoradd
    image: "nvcr.io/nvidia/k8s/cuda-sample:vectoradd-cuda11.2.1-ubuntu18.04"
    resources:
      limits:
        nvidia.com/gpu: 1
EOF
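To confirm that the pod really used the GPU, its logs can be checked for the line the CUDA vectorAdd sample prints on success, `Test PASSED`. A minimal sketch, assuming the pod name `cuda-vectoradd` from the manifest above and the default namespace; the function names are my own.

```shell
# Succeeds if the text on stdin contains the sample's success marker.
vectoradd_passed() {
  grep -q "Test PASSED"
}

# Fetch the pod logs and report the outcome in one line.
check_vectoradd() {
  if kubectl logs cuda-vectoradd | vectoradd_passed; then
    echo "GPU test passed"
  else
    echo "GPU test did not pass (yet); try: kubectl describe pod cuda-vectoradd"
  fi
}
```

Calling `check_vectoradd` once the pod shows as Completed in k9s gives a quick yes or no. If the pod stays Pending instead, `kubectl describe node` shows whether the node advertises an `nvidia.com/gpu` resource at all.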

Conclusion

This was by far the easiest way I have experienced of running Kubernetes with GPU support on Ubuntu. Whilst I’m not sure I would use this in a production environment, it works for me as an MLOps/DevOps engineer who wants to focus on developing cryptanalysis containers to run on Kubernetes. If you want such an environment, I can really recommend it!